You may have heard about WebAssembly out there, right? No? No problem... my role in this blog post is to explain a little about WebAssembly, what it has to do with Serverless and Cloud Native, and to do a futurology exercise showing you what (in my opinion) will happen going forward (in fact, it's already happening). A lot of things? Well... first things first... what the hell is WebAssembly? Sit back, because here comes a story... this post is long!

The evolution of the web

The web has evolved very fast. To give you, noble readers, an idea of how new the web as we know it today is, here are some of the main milestones and their dates:

- URL (December - 1994) - tools.ietf.org/html/rfc1738/201

- HTML (June - 1993) - w3.org/MarkUp/draft-ietf-iiir-html-01.txt

- HTTP Protocol (May - 1996) - tools.ietf.org/html/rfc1945/201

We are talking about things we take for granted today, like URLs, HTTP, HTML and other jargon that is on everyone's lips, whose specifications were only written in the 1990s.

Over time, web applications have become increasingly common: first in the browser and, a few years later, on our smartphones. Nowadays a very widespread concept is mobile first, which says that the architecture and development of an application should focus primarily on mobile devices.

Javascript

When we hear about Javascript, this programming language is almost automatically synonymous with the web. That is because it was created by Brendan Eich (today co-founder and CEO of Brave), who was hired by Netscape at the time to develop a scripting language for its browser, Netscape Navigator. Brendan initially thought of embedding the Scheme language (a LISP dialect) in the browser, but Netscape's management soon opted for a new language with a syntax "similar" to Java (which was very successful at the time). They needed a prototype of the new language, which Brendan developed in just 10 days. Thus Javascript was born: a language loved by some and hated by others, but with undeniable importance and a leading role in web applications to this day. About 96.8% of websites use Javascript (w3techs.com/technologies/details/cp-javascr..)

Fun fact: did you know that the "Javascript" trademark belongs to Oracle? (tsdr.uspto.gov/#caseNumber=75026640&cas..)

In November 1996, Netscape submitted Javascript to ECMA International (originally the European Computer Manufacturers Association) as a first step towards its standardization. The first edition of the language specification was released in June 1997 under the name ECMAScript. Every company that ships a web browser (Apple, Google, Mozilla, Microsoft, Brave, Opera, etc.) can follow this same specification and thus make its browser compliant with the Javascript language. This ECMA standardization is very important because it ended the era of each vendor inventing its "own version of Javascript". The same Javascript code I write will run in Safari, Chrome, Firefox, Edge, and so on. Imagine having to write different Javascript code for each browser? It wouldn't be pleasant...

The web has evolved so much that today it is possible to run Unreal Engine 4 in the browser. See the video below for an example:

You may be asking yourself: How is this possible?

asm.js

There is a subset of Javascript called asm.js, created in 2013. The idea is to allow software written in languages such as C to run as web applications with better performance than ordinary Javascript. Much of this "magic" comes from Emscripten, a source-to-source compiler that works as an LLVM backend and emits this asm.js subset of Javascript.

In short: if your software was originally designed and written to run as a native executable, with asm.js you can integrate it into your client-side web application.

But isn't that slow? No, because browsers can compile asm.js ahead of time (AOT), which yields much better speed and performance. Besides, asm.js was supported by many browsers, which made it an interesting alternative to Google NaCl (Native Client), which until then only ran in Chrome.

Google NaCl (Native Client)

Google NaCl (Native Client) is a sandbox technology that allows you to run native code securely from within a web browser, regardless of the client's operating system. This allows web applications running in this context to perform at near-native speed. This technology was also used in Chrome OS.

An interesting use of Google NaCl (developer.chrome.com/native-client) can be seen in the Go Playground (play.golang.org), where you can run Go code from your browser. The user's code runs safely, isolated from Google's infrastructure: NaCl limits the amount of CPU and RAM a program can consume and prevents it from accessing the network or file system of the machines that run it.

However, since everything in our field changes and evolves very quickly, in May 2018 Google introduced gVisor, a second generation of sandbox technology.

gVisor

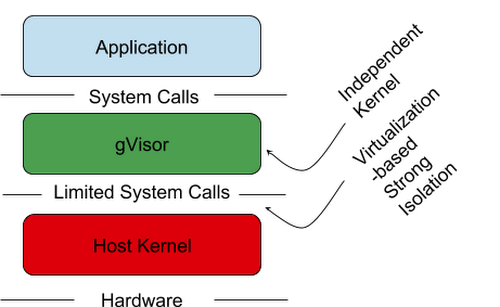

gVisor is a sandbox technology developed by Google that focuses on security, efficiency and ease of use. It implements around 200 Linux system calls in user space, as opposed to Docker, whose containers call the host's Linux kernel directly and are isolated via namespaces. gVisor is written in Go, which avoids common pitfalls of software written in C, such as memory-safety bugs. It is widely used in production at Google in products like App Engine, Cloud Functions, Cloud Run and GKE (Google Kubernetes Engine).

Although containers have revolutionised the way we develop, package and deploy our applications, security is a major concern in this type of environment. Many people think that Docker provides the necessary security by default and run software on this platform without worry. However, downloading Docker images from unknown sources, running applications as root inside containers and similar practices open the door to a series of security problems in your environment.

As someone once said:

"Docker is about running random code downloaded from the Internet and running it as root."

Can you think of anything more dangerous than that? So we must assume that Docker and the Linux kernel alone will NOT protect us from malware or attacks.

Below is an excerpt from the article "Are Docker containers really secure?" by Daniel J. Walsh:

So what is the problem? Why don't containers contain?

The biggest problem is everything in Linux is not namespaced. Currently, Docker uses five namespaces to alter processes view of the system: Process, Network, Mount, Hostname, Shared Memory.

While these give the user some level of security it is by no means comprehensive, like KVM. In a KVM environment processes in a virtual machine do not talk to the host kernel directly. They do not have any access to kernel file systems like /sys and /sys/fs, /proc/.

Device nodes are used to talk to the VMs Kernel not the hosts. Therefore in order to have a privilege escalation out of a VM, the process has to subvirt the VM's kernel, find a vulnerability in the HyperVisor, break through SELinux Controls (sVirt), which are very tight on a VM, and finally attack the hosts kernel.

When you run in a container you have already gotten to the point where you are talking to the host kernel.

Major kernel subsystems are not namespaced like:

SELinux, Cgroups, file systems under /sys /proc/sys, /proc/sysrq-trigger, /proc/irq, /proc/bus

Devices are not namespaced: /dev/mem, /dev/sd file system devices, Kernel Modules

If you can communicate or attack one of these as a privileged process, you can own the system.

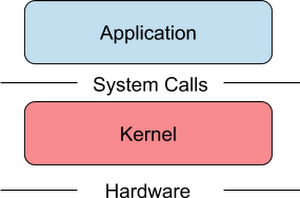

Applications running in traditional Linux containers are not sandboxed, since they make system calls directly to the kernel of the host they run on:

Source: cloud.google.com/blog/products/gcp/open-sou..


The kernel imposes some limits on the resources the application can access, implemented through cgroups and namespaces, but not every resource can be controlled with these mechanisms. You can add a seccomp filter, but then you have to define in advance the list of system calls the application is allowed to make. In practice, that means you need to know exactly which system calls your application will use.
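To make the allowlist idea concrete, here is a toy Python model of how an allowlist-style filter behaves. It is not the real seccomp API (real seccomp filters are BPF programs loaded into the kernel, for example with the help of libseccomp); the `run_filtered` function and the syscall lists are hypothetical, chosen only to show why every system call must be known in advance.

```python
# Toy model of an allowlist-style syscall filter.
# NOT the real seccomp API: real filters are BPF programs loaded into the kernel.

def run_filtered(program, allowed):
    """Execute syscall names in order, stopping at the first one not allowed.

    Returns a tuple (executed_calls, blocked_call), where blocked_call is None
    if every call was on the allowlist.
    """
    executed = []
    for call in program:
        if call not in allowed:
            # A real filter could kill the process or fail the call with an errno.
            return executed, call
        executed.append(call)
    return executed, None


# Hypothetical allowlist written for an app that only reads, writes and exits.
ALLOWLIST = frozenset({"read", "write", "exit_group"})

print(run_filtered(["read", "write", "exit_group"], ALLOWLIST))
# (['read', 'write', 'exit_group'], None)
print(run_filtered(["read", "openat", "write"], ALLOWLIST))
# (['read'], 'openat')
```

The second run is blocked because the app started opening files (`openat`), a call nobody put on the list: that is the maintenance burden of writing the list by hand.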

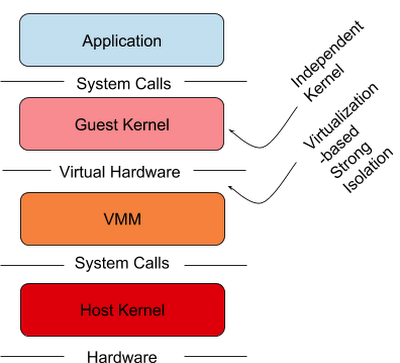

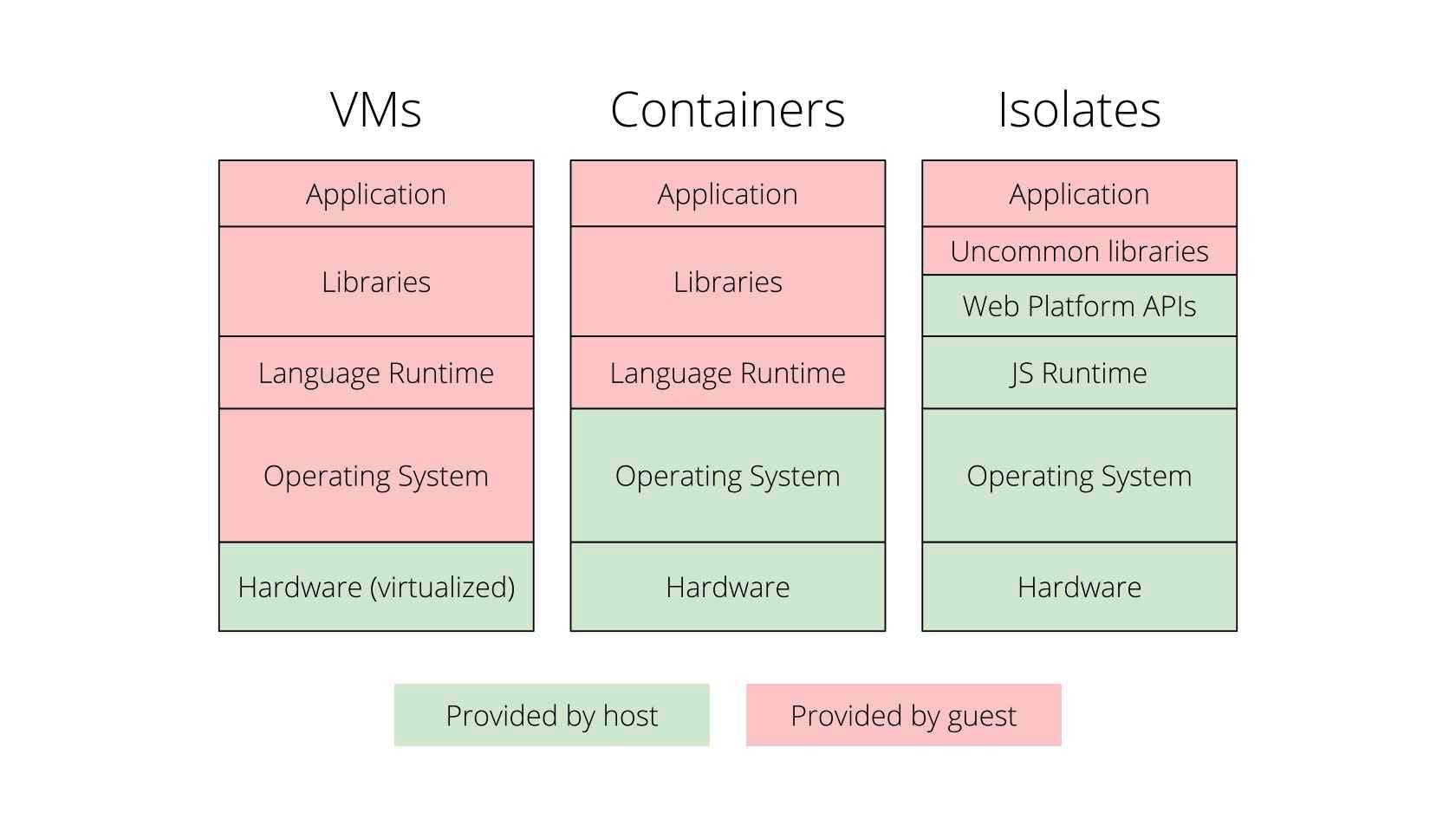

One way to increase container isolation is to run each container in its own virtual machine. In this case, the running container has its own kernel, its own virtualized devices and so on, completely separate from the host running it.

Source: cloud.google.com/blog/products/gcp/open-sou..


It is a valid approach, but with a large footprint. Initiatives like Kata Containers follow the same approach but keep the VM (Virtual Machine) stripped down to minimize the footprint and maximize the performance of container isolation.

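gVisor is lighter than a VM (Virtual Machine) while keeping a similar level of isolation. To achieve this, gVisor includes its own kernel, written in Go and running in user space, that implements most Linux system calls, so the application's calls are handled by gVisor instead of going straight to the host kernel.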

Source: cloud.google.com/blog/products/gcp/open-sou..


We can then infer that gVisor runs containers with an extra layer of isolation. With gVisor, we reduce the direct interaction between the container and the host and, consequently, the attack surface.

Sandbox

We have seen technologies like gVisor, Google NaCl, seccomp, Docker, cgroups and namespaces, Virtual Machines, Kata Containers, etc. All of them deal, in one way or another, with something in common: isolation. Add to this list SELinux, AppArmor, Nabla Containers, among others. Who remembers Microsoft's Silverlight? It was a micro version of the .NET Framework that allowed applications to run in a sandbox within the browser.

We can think of the sandbox as a special layer of protection. With a sandbox, the operations performed inside it are confined to a restricted area, where any untrusted code can run completely isolated from the operating system.
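A minimal way to picture this restricted area: in the toy Python sketch below (the `Sandbox` and `Trap` names are hypothetical), each sandbox owns a private, fixed-size memory buffer and every load or store is bounds-checked, so guest code can neither escape its region nor read another sandbox's data. WebAssembly's linear memory follows the same principle (out-of-range accesses trap), but this is a simplified model, not how any particular runtime is implemented.

```python
class Trap(Exception):
    """Raised when guest code tries to touch memory outside its sandbox."""


class Sandbox:
    """Toy sandbox: guest code can only access its own fixed-size byte buffer."""

    def __init__(self, size):
        self._memory = bytearray(size)  # private to this sandbox, zero-filled

    def _check(self, addr):
        if not 0 <= addr < len(self._memory):
            raise Trap(f"out-of-bounds access at address {addr}")

    def store(self, addr, value):
        self._check(addr)
        self._memory[addr] = value & 0xFF  # keep only the low byte

    def load(self, addr):
        self._check(addr)
        return self._memory[addr]


a, b = Sandbox(16), Sandbox(16)
a.store(0, 42)
print(a.load(0), b.load(0))  # 42 0  (b cannot see a's data)
try:
    a.load(16)  # one past the end of a's memory
except Trap as err:
    print("trapped:", err)  # trapped: out-of-bounds access at address 16
```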

When you run a serverless workload, for example, it inevitably runs inside a sandbox, isolated from the host server that runs it.

Serverless

With the advent of serverless computing, cloud service providers (Google, AWS, Microsoft, etc.) deploy your application code and dynamically manage the allocation of the resources needed to run your software. This frees the developer from worrying about infrastructure management.

To achieve this, among other things, cloud providers use a variety of virtualization platforms, such as the aforementioned Google gVisor and AWS Firecracker, a micro-VM technology that is one of the secrets behind the speed and performance of the famous Lambda Functions. All of these platforms, as mentioned before, use an isolated environment to run their computational workloads.

When we use any of the serverless offerings on the market today, one thing we have to deal with is cold starts. I will not explain them in detail, but in summary: a cold start is the time it takes to initialize the environment (process, container or VM) that executes your code before it can serve the first request.
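The effect can be sketched with a toy Python model (the `FunctionHost` class and its methods are hypothetical, not any provider's API): the first invocation pays for initializing the environment, and later invocations reuse the warm instance until the platform evicts it. Real platforms decide on their own when to reclaim idle instances.

```python
class FunctionHost:
    """Toy serverless host that keeps one initialized instance warm."""

    def __init__(self, init_handler):
        self._init_handler = init_handler  # expensive setup: runtime, dependencies, etc.
        self._instance = None
        self.cold_starts = 0

    def invoke(self, event):
        if self._instance is None:
            # Cold start: initialize the environment before serving the request.
            self.cold_starts += 1
            self._instance = self._init_handler()
        return self._instance(event)

    def evict(self):
        """Simulate the platform reclaiming an idle instance."""
        self._instance = None


host = FunctionHost(lambda: (lambda event: event * 2))
host.invoke(1)  # cold
host.invoke(2)  # warm
host.evict()
host.invoke(3)  # cold again
print(host.cold_starts)  # 2
```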

Is there any way to escape cold starts? Let's see...

Edge Computing

Another very fashionable term. Put simply, Edge Computing is a network of micro datacenters that process data locally, that is, at the edge of the network, instead of sending it to the cloud. This way, only the most relevant data travels across the network, so to speak. This makes it easier for users to access your files, for example, and latency decreases because the content is stored closer to the user.

CDN companies like Cloudflare and Fastly started to think: what if we let people run code on our servers instead of just caching assets (images, etc.)? Fastly Terrarium and Cloudflare Workers allow you to run your code (in WebAssembly format) on the edge, on a server somewhere in the world.

Take Cloudflare's platform called Workers. Unlike many existing cloud platforms, Workers does not use containers or VMs (Virtual Machines). How do they do it?

Well, there is a technology created by the Google Chrome team for the V8 engine: Isolates. An Isolate is a lightweight context, and a single process can run thousands of them. This makes it possible to run "untrusted" code from different customers in a single operating system process. Isolates start very quickly, and one Isolate cannot access the memory of another, for example.

One disadvantage of Isolates is that, unlike Lambda, you cannot run an arbitrary compiled binary on them. You must write your code in Javascript or in a language that compiles to WebAssembly, such as Rust, Go, C, C++ or .NET, among others. A lot of work is being done so that more and more languages compile to WebAssembly; you can see the full list here (github.com/appcypher/awesome-wasm-langs).

Source: infoq.com/presentations/cloudflare-v8


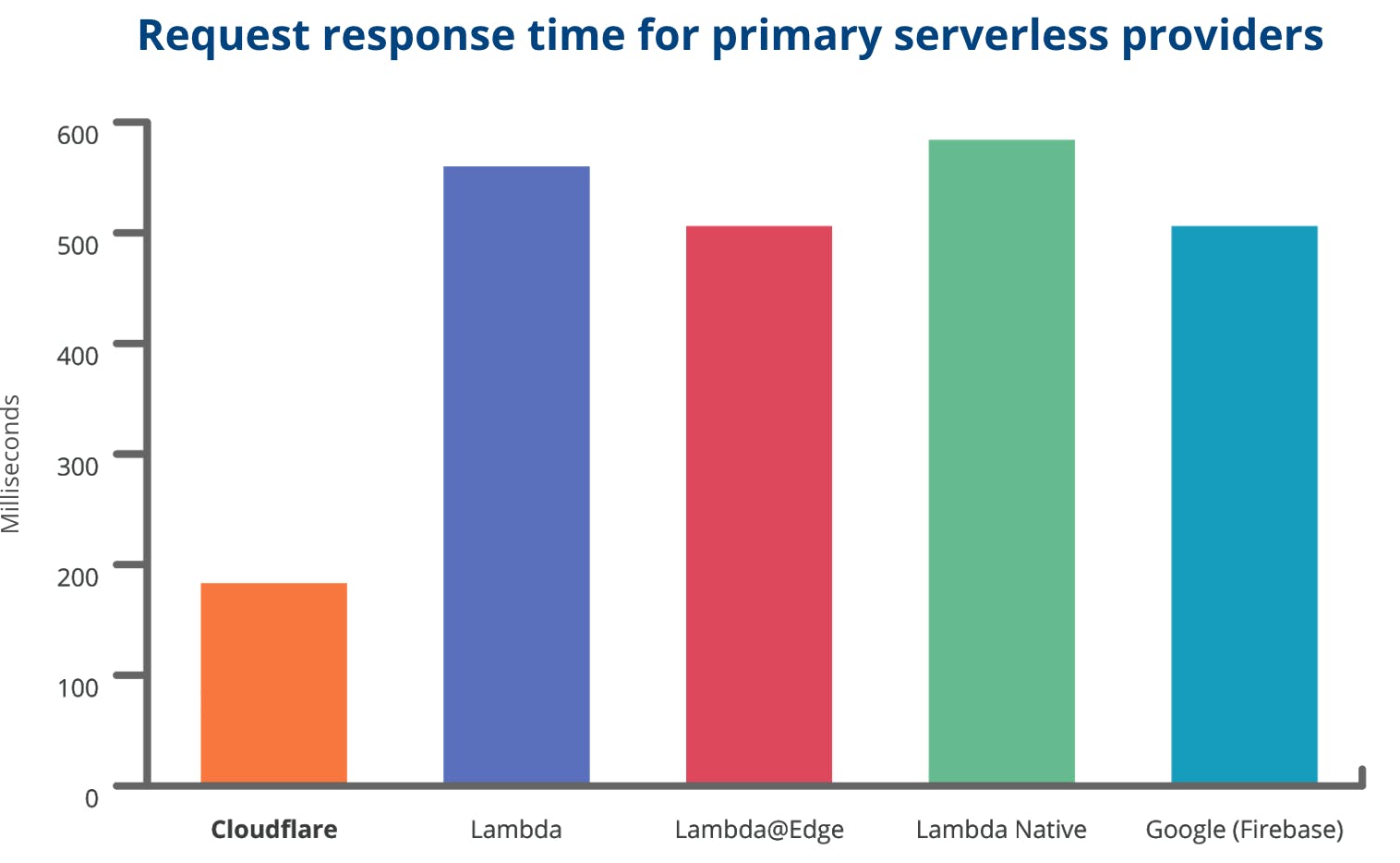

Below is a comparison of some available Serverless options:

Source: blog.cloudflare.com/cloud-computing-without..


The big advantage of Cloudflare Workers is that they don't have to start a process the way Lambda does. Isolates start in about 5 milliseconds, almost imperceptibly. We get isolation and scaling much faster than with the serverless offerings compared in the chart above.

Did you notice that we are talking about isolation again? The name V8 Isolates itself gives it away. =)

Cloudflare Workers was initially created to let you deploy Javascript code directly to datacenters (or PoPs, Points of Presence) around the world, and until 2018 you could only use Javascript, as stated above. With WebAssembly, you can now run a range of languages that compile to WebAssembly. Phew... we have finally arrived at WebAssembly itself.

WebAssembly

WebAssembly - usually abbreviated as "WASM" - is a technology that extends the web platform to support compiled languages such as C, C++, Rust, Go and others. Code in these languages can be compiled to the binary WASM format and run, for example, in a browser.

The official WebAssembly website defines it as follows:

WebAssembly (abbreviated Wasm) is a binary instruction format for a stack-based virtual machine.

The main features of WebAssembly are:

- Binary instruction format

- Stack-based VM

- Portability

A virtual machine is an abstraction of a computer that emulates a real machine; in a stack-based one, instructions take their operands from, and push their results onto, a stack rather than named registers. Such a VM is usually built either as an interpreter of a special kind of bytecode or as a runtime that translates that bytecode into native CPU instructions as the program runs (JIT, Just-In-Time compilation). Examples of stack-based virtual machines are the JVM (Java Virtual Machine) and .NET's CLR (Common Language Runtime).
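To make "stack-based" concrete, here is a toy interpreter in Python. The opcode names mirror WebAssembly's text format (`i32.const`, `local.get`, `i32.add`, `i32.mul`), but the tuple encoding and the `run` function are hypothetical; real engines decode the binary format and often compile it instead of interpreting it like this. Each instruction pops its operands from the stack and pushes its result back.

```python
MASK32 = 0xFFFFFFFF  # i32 arithmetic wraps around modulo 2**32


def run(code, locals_=()):
    """Interpret a list of (opcode, *operands) tuples on an operand stack."""
    stack = []
    for op, *args in code:
        if op == "i32.const":
            stack.append(args[0] & MASK32)
        elif op == "local.get":
            stack.append(locals_[args[0]])
        elif op in ("i32.add", "i32.mul"):
            rhs, lhs = stack.pop(), stack.pop()  # operands come off the stack in reverse
            result = lhs + rhs if op == "i32.add" else lhs * rhs
            stack.append(result & MASK32)
        else:
            raise ValueError(f"unknown opcode: {op}")
    return stack.pop()  # the result is left on top of the stack


# (x + 2) * 3, roughly the instruction sequence a compiler could emit
PROGRAM = [
    ("local.get", 0),
    ("i32.const", 2),
    ("i32.add",),
    ("i32.const", 3),
    ("i32.mul",),
]

print(run(PROGRAM, (4,)))  # 18
```

Note that no instruction names a register: `i32.add` simply consumes whatever two values are on top of the stack, which keeps the encoding compact and independent of the real CPU's register set.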

With regard to portability, the main goal is that you write your code once and run it anywhere. Wait... I've heard that before 🤔

Write Once, Run Anywhere


It doesn't matter which CPU, operating system or architecture you run your code on, because it is a standardised binary format and the host running it takes care of the rest.
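One concrete piece of that standard format: every WebAssembly binary starts with the same 8-byte preamble, the magic bytes `\0asm` followed by the format version (1) as a little-endian 32-bit integer, regardless of the CPU or operating system that produced it or will run it. A module consisting of only this preamble, with no sections, is a valid empty module. The Python sketch below builds and checks that preamble; `parse_preamble` is a hypothetical helper, not part of any runtime.

```python
import struct

WASM_MAGIC = b"\x00asm"  # bytes 0x00 0x61 0x73 0x6D


def parse_preamble(module: bytes) -> int:
    """Check the magic number and return the binary format version."""
    if len(module) < 8 or module[:4] != WASM_MAGIC:
        raise ValueError("not a WebAssembly binary")
    (version,) = struct.unpack("<I", module[4:8])  # little-endian u32
    return version


# The smallest valid module: preamble only, no sections.
EMPTY_MODULE = WASM_MAGIC + struct.pack("<I", 1)

print(EMPTY_MODULE.hex())            # 0061736d01000000
print(parse_preamble(EMPTY_MODULE))  # 1
```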

So how is WebAssembly different from all the sandbox technologies presented so far? Here are some reasons:

- W3C standard (not a proprietary technology that belongs to a single company)

- Supports multiple languages (Go, Rust, C, C++, etc.)

- Integration with Javascript

- Efficient (the compact binary format is very lightweight)

WebAssembly has become the fourth language to run natively in browsers, after HTML, CSS and Javascript. All major browsers already support it:

- Brave

- Chrome

- Edge

- Firefox

- Opera

- Safari

So should I use WebAssembly instead of Javascript for everything? Of course not... after all, WebAssembly is not a silver bullet. WebAssembly really shines when we need to perform resource-intensive operations such as resizing images, processing audio, etc. These applications require a lot of mathematical operations and careful memory management. While it is possible to perform these tasks in pure JavaScript, and engines like V8 have made impressive efforts to optimize this code, in the end nothing beats a compiled language with static types and explicit allocation.

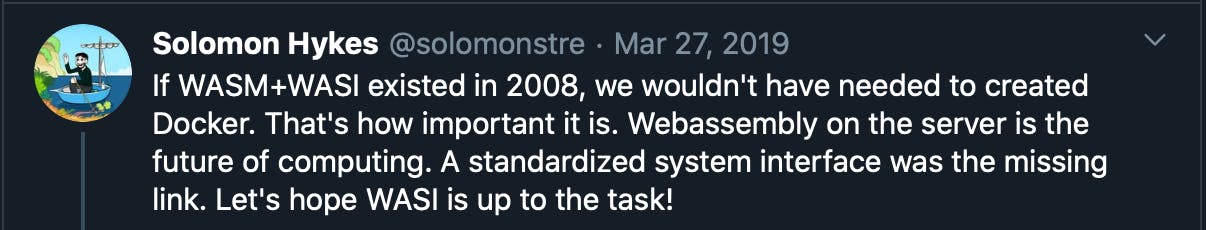

WebAssembly is so important that the creator of Docker tweeted the following:

twitter.com/solomonstre/status/111100491322..


Remember that we talked about the JVM (Java Virtual Machine) earlier? Look at what Solomon Hykes, the creator of Docker, wrote about it:

twitter.com/solomonstre/status/111113155976..


This makes it clear, once again, that a ubiquitous runtime is not a new desire; there have been several initiatives to achieve it for a long time.

However, WebAssembly is not limited to browsers. It can already run on the server side, and there are a number of runtimes for that:

- wasmtime

- wasmer

- lucet

- wasm3

- eos-vm

- wasm-micro-runtime

Each has its own focus: some runtimes target blockchain applications, others target performance, and so on.

And this is where it starts to get interesting...

WASI (WebAssembly System Interface)

Developers started using WebAssembly outside the browser because it provides a fast, scalable and secure way to run the same code on any machine.

To do that, the code needs to communicate with the operating system of the machine it runs on, and that communication requires a system interface.

Remember that WebAssembly is an assembly language for a CONCEPTUAL machine, not a real machine. Because of this, WebAssembly can run on different physical machine architectures.

And since WebAssembly is an assembly language for a conceptual machine, it also needs a system interface for a conceptual operating system, not for one specific operating system such as Linux or Windows. That way WebAssembly can run on different operating systems.

That is why WASI (WebAssembly System Interface) was created: a system interface for the WebAssembly platform.

In short, with WASI developers will not need to port (recompile) their code for each different platform out there.

With the introduction of this new abstraction layer, your code can be compiled for WebAssembly and run on any platform that supports the WASI standard. The real "write once (or compile once), run anywhere".
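WASI also follows a capability-based design: a module cannot open arbitrary paths, only paths under directories that the host explicitly grants to it (preopened directories). The toy Python model below illustrates that idea only; `ToyHost`, `write`, `can_open` and `guest_main` are hypothetical names, not the actual WASI functions, and it assumes absolute POSIX-style paths.

```python
import posixpath


class ToyHost:
    """Hypothetical host interface, loosely inspired by WASI's capability model."""

    def __init__(self, preopened_dirs):
        # Absolute directories the host chose to grant to the guest module.
        self._preopened = {posixpath.normpath(d) for d in preopened_dirs}
        self.stdout = []

    def write(self, text):
        """Guest output; the host decides where it actually goes."""
        self.stdout.append(text)

    def can_open(self, path):
        """Allow a path only if it lies inside a preopened directory."""
        if not posixpath.isabs(path):
            return False  # this toy only accepts absolute paths
        norm = posixpath.normpath(path)  # collapses tricks like "/data/../etc"
        return any(posixpath.commonpath([norm, d]) == d for d in self._preopened)


def guest_main(host):
    """The guest only sees the host interface, never the real OS."""
    host.write("hello from the guest\n")
    return [host.can_open(p) for p in ("/data/input.txt", "/etc/passwd")]


host = ToyHost(["/data"])
print(guest_main(host))                      # [True, False]
print(host.can_open("/data/../etc/passwd"))  # False
```

The guest code never mentions Linux, Windows or macOS; mapping `write` and `can_open` onto the real operating system is entirely the host's job, which is what lets the same module run anywhere.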

Cloud Native

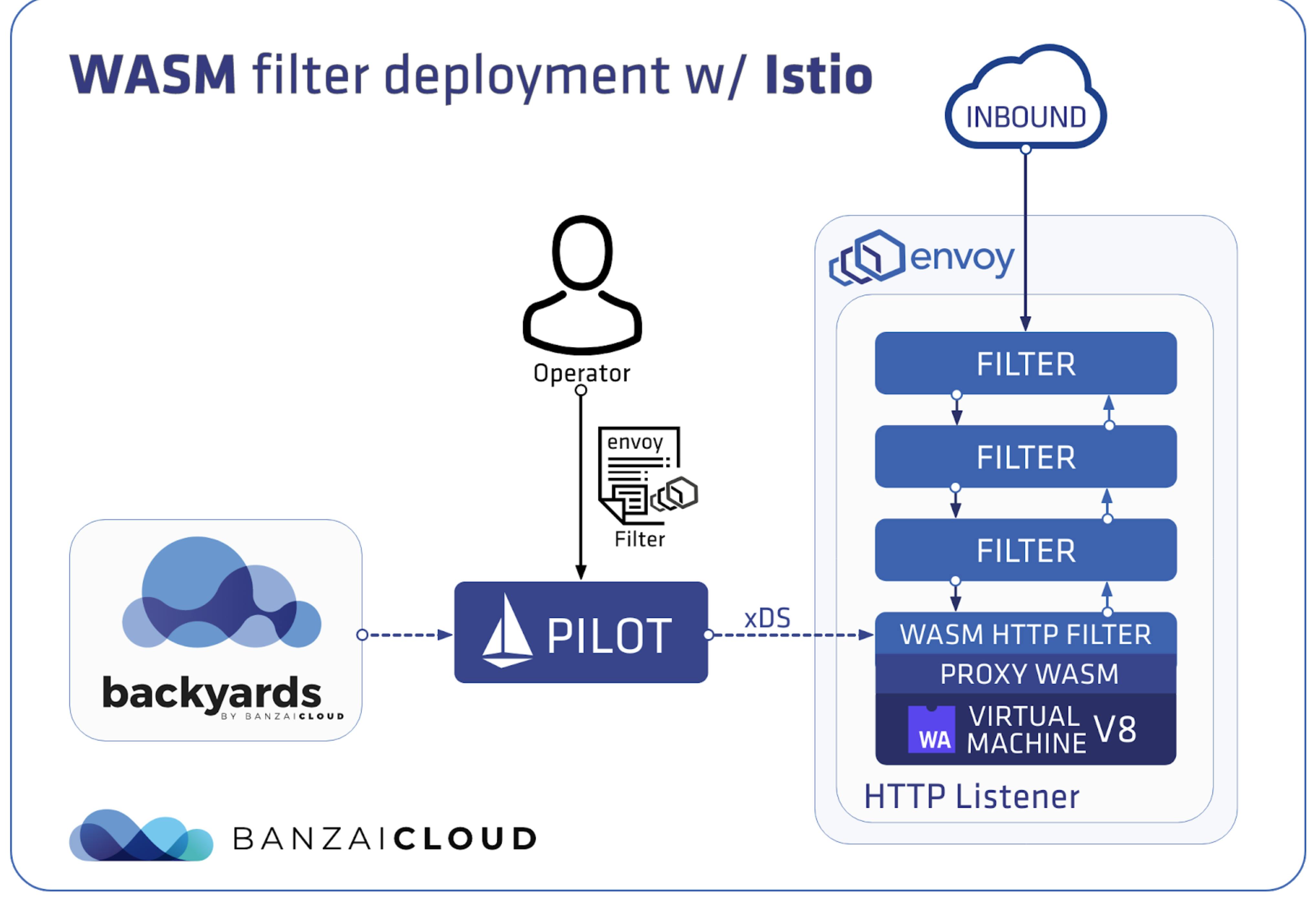

WebAssembly's reach is so broad that it has arrived in the Cloud Native world. One of the pioneers in adopting WebAssembly was the Envoy proxy.

Envoy embedded a WebAssembly virtual machine so that extending it no longer requires recompiling Envoy: you load your WebAssembly module into Envoy as it is, which makes the proxy much easier to extend.

Envoy handles requests through filters. With WebAssembly you can write your own filters and load them without having to recompile Envoy.
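Conceptually, a filter chain is a sequence of small functions that can inspect or modify a request, or stop it early with a response. The toy Python sketch below shows only that idea; `require_header`, `add_header` and `run_chain` are hypothetical helpers. In Envoy, each such filter would instead be a compiled `.wasm` module loaded by Envoy's embedded VM through the Proxy-Wasm ABI, which this sketch does not model.

```python
def require_header(name):
    """Filter that rejects requests missing a given header with HTTP 403."""
    def _filter(request):
        return None if name in request["headers"] else 403
    return _filter


def add_header(name, value):
    """Filter that adds a header and lets the request continue."""
    def _filter(request):
        request["headers"][name] = value
        return None
    return _filter


def run_chain(filters, request):
    """Run filters in order; the first non-None status short-circuits the chain."""
    for f in filters:
        status = f(request)
        if status is not None:
            return status
    return 200  # all filters passed: stand-in for forwarding to the upstream


# Extending the proxy means adding a filter to the list, not rebuilding the host.
chain = [require_header("x-api-key"), add_header("x-filtered-by", "toy-wasm")]

ok_request = {"headers": {"x-api-key": "secret"}}
print(run_chain(chain, ok_request))       # 200
print(ok_request["headers"])              # {'x-api-key': 'secret', 'x-filtered-by': 'toy-wasm'}
print(run_chain(chain, {"headers": {}}))  # 403
```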

It is well worth checking out the work the solo.io people are doing on WebAssembly in Envoy. They created WebAssembly Hub, a place where people can publish and share their own wasm extensions for Envoy.

Another very interesting project is Krustlet, a virtual kubelet written in Rust by the team at Deis Labs, a division of Microsoft. The purpose of Krustlet is to make it easy to run WebAssembly workloads on Kubernetes. The team also wanted to show the community that parts of Kubernetes (which is written in Go) could be written in other languages (like Rust).

It is important to remember that both Krustlet and WASI are still experimental, but the combination of these two technologies is very promising. You give Kubernetes access to a second kind of sandbox and gain an extra way to distribute your application, which can now run outside the datacenter, in an edge environment.

The future

WebAssembly is a very new technology. It reached the major browsers in 2017 (just under 3 years ago at the time of writing). The team working on it cares a lot about backward compatibility, which means that the WebAssembly you create TODAY will continue to work in browsers in the future.

The main idea is that you can run precompiled code written in any language on the web and any other platform.

With WASI, a new way of writing applications opens up: in any language, running on any platform, with modules that talk to each other fluently without wrappers or network calls.

Will WASM replace Javascript?

Not in the near future; they will coexist. WASM cannot yet access the DOM directly, for example, and existing applications are not going to be rewritten in WASM any time soon. But who knows, in the future new applications may be written entirely in WASM (using a language like Go, Rust, etc.).

Will WASM replace Docker?

I'll let the creator of Docker answer that:

I am not an expert in WebAssembly, but I am excited about the possibilities it opens up for the future. I hope you are excited too!

Bibliography:

A Brief Story of Javascript - speakingjs.com/es5/ch04.html

Open-sourcing gVisor, a sandboxed container runtime - cloud.google.com/blog/products/gcp/open-sou..

asm.js - asmjs.org

gVisor - gvisor.dev

Are Docker containers really secure? - opensource.com/business/14/7/docker-securit..

Updates in container isolation - lwn.net/Articles/754433

Container Isolation at Scale (Introducing gVisor) - youtube.com/watch?v=pWyJahTWa4I

Cloud Computing without Containers - blog.cloudflare.com/cloud-computing-without..

Fine-grained Sandboxing with V8 Isolates - infoq.com/presentations/cloudflare-v8

Redefining extensibility in proxies - introducing WebAssembly to Envoy and Istio - istio.io/latest/blog/2020/wasm-announce